- Dołączył

- 26 Maj 2015

- Posty

- 19243

- Reakcje/Polubienia

- 56077

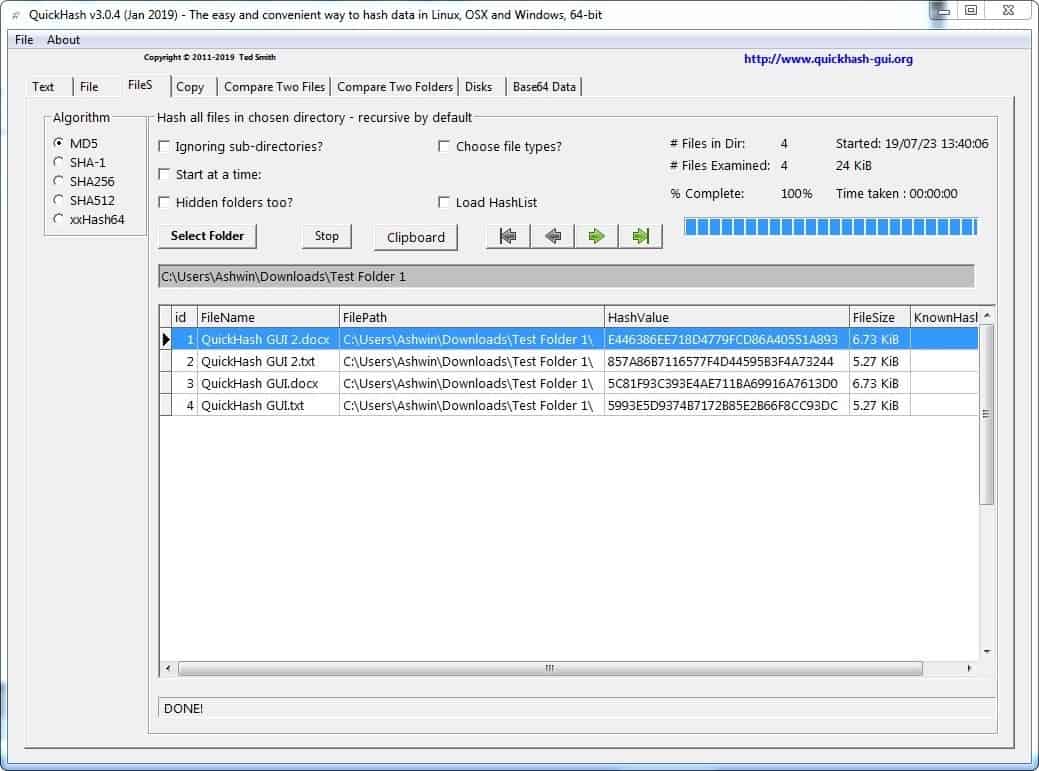

źródło:QuickHash GUI to otwarty interfejs graficzny dla Linuxa, Windowsa i Apple Mac OSX, który umożliwia łatwe i szybkie haszowanie danych: tekstu, plików tekstowych wiersz po wierszu, plików binarnych, porównania plików, porównania folderów, dysków i woluminów dysków (jak administrator), danych Base64, a także pozwala na kopiowanie plików w jednym folderze do innego przy użyciu haszowania danych po obu stronach w celu porównania i integralności danych.

Program pierwotnie został zaprojektowany dla Linuksa, ale obecnie jest również dostępny dla Windows i Apple Mac OSX. Dostępne są algorytmy skrótu MD5, SHA1, SHA256, SHA512 i xxHash.

Zaloguj

lub

Zarejestruj się

aby zobaczyć!

Zaloguj

lub

Zarejestruj się

aby zobaczyć!

Pobieranie:

Zaloguj

lub

Zarejestruj się

aby zobaczyć!